the future i want to be in

tiny life update: i am joining Anthropic, an AI safety research lab.

“We do not know of languages beyond the genetic code and natural languages, but that doesn’t mean there are none. I believe such ‘other languages’ exist and that the letter was composed in one of them.” — P. Hogarth

Sometimes you have to break up with things you used to be fascinated by in order to redefine your relationship with them.

As you might know, I left my architecture major during my first year at university, feeling an urge to allow myself to get lost within other disciplines. Carlo Ginzburg puts it very well: "Architecture is not a prison or a fortress, architecture is a port, a place from which you go in different directions." I have always loved architectural design and will always be inspired by it, but sometimes you have to quit entirely to figure out what in its nature really speaks to your inherent fascination. Instead, a year ago I fell in love with the architectural design and development of neural nets in machine learning.

As I was walking through the streets of Berlin, whose layout and architecture remind me of my hometown in Eastern Ukraine, I thought of the beautiful balls in Jane Austen's novels, the sensual waltzes in War and Peace, and Anna Karenina's dance with Vronsky. They were important not because people had nothing else to brighten up the evening in the absence of personal computers. Those were the rare moments when contiguity was allowed. The era of hand-written letters, printed books, and long-awaited meetings seemed to bring up a huge palette of dissimilar human sensations, which have faded away in modern contexts.

As with every technology, whether it is the printing press or the automobile, there come questions about its fundamental principles and its impact on human culture. I’m fascinated by the inherent mechanics of neural nets, how they interpret and learn parts of our humanity from data, and the ways such technology will mediate our interactions with each other. When I bounce ideas in my personal note-taking tool Synevyr with GPT-3 or other non-public models to get feedback on research projects, find recommendations on places to visit during my travels, learn new theoretical concepts, or create fun poems, it all makes me think about what comes next.

Whether it’s generative modeling, continual multimodal systems, or large language models, I do believe this technology will afford much closer contiguity between humans and computers than we have ever seen. It will allow humans and machines to interact more naturally, overcome the limitations of each other’s intelligence, and unlock higher creative potential and new kinds of knowledge production in our human progress. My view is that somewhere there is a magical and fun future, where our abilities to tell new stories, create shared things, cope with emotions and life problems, and much more will be fundamentally transformed. If we try hard to imagine such futures, we will inevitably be there quite soon.

I also think that, to achieve this, rigorous scientific and policy work on AI robustness, fairness, interpretability, and alignment is critical to getting it right. I think that approaching neural nets from a physics perspective and studying them from first principles, empirically, will yield new discoveries that will shape future policies and regulatory frameworks.

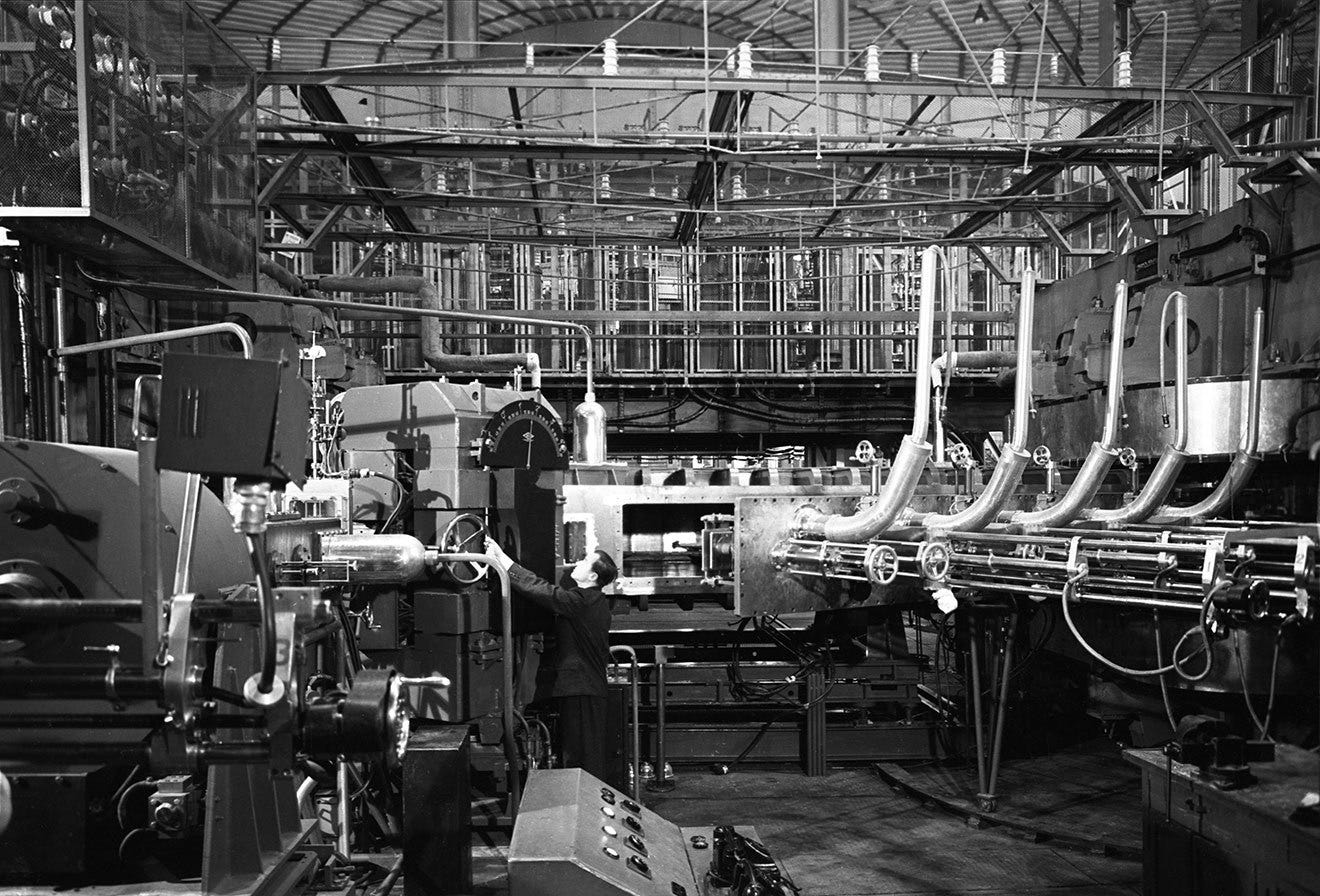

On one end of the spectrum of how I think about safety in AI is the image of closed nuclear cities, where theoretical physicists and engineers lived and worked on the creation of the atomic bomb. Nuclear urbanism also meant that radiation safety measures had to be carefully planned out in architectural spaces by zones. Any leak from the “dirty” (radioactive) zone through research notebooks or clothes would lead to an unsafe environment.

I’ve definitely learned the ugly side of our humanity through my work on investigative projects: Russian systemic cybercrimes on critical infrastructure, Myanmar’s body snatching system during the military coup, the deployment of French weapons by Lebanese forces, the Capitol Riot, unjustified arrests in Portland, Gaza airstrikes. More often than not, war crimes are committed due to the open-ended capabilities of technologies. Similarly, the open-endedness of objective functions in machine learning systems and the capability to tune models to specific inputs and outputs will have the most detrimental consequences in the hands of bad actors, and this is partly what AI safety is about. Just as we need to worry about cybersecurity to protect our computer networks from attack, and about the dual use of nuclear weapons, we need to worry about the misuse of AI systems. If we take the route of nuclear weaponry in AI, then we will end up with yet another set of deterrence policies that will fail us as much as they are failing us right now.

On the other end of the spectrum are historical images of the 1920s introduction of automobiles in American cities and the strong public reaction to the “deadly machines that were killing people.” As with cars and planes, where safety measures were developed over time through rigorous science and policy regulation, the same approach is equally relevant for AI.

The public perception of cars has gradually changed, and now we think of them as one more commodity in our lives. The image of an automobile embodies a single entity of technology: the car itself, with variations in size, color, price, brand, add-on features, and context of use (sports vs. daily life). But what’s fascinating and different about AI is that there is an almost infinite number of such entities. It’s not one model that we take variations from, although we can (aka foundation models), but the infinite number of creative entities we can produce just through the ideas this technology allows us to experience.

ML safety for me means a holistic system of components: difficult research questions, creatively deployed outputs, and policy storytelling. Training continuous models in a predictable manner with full transparency of their reasoning process, and assigning higher confidence scores to low-risk behaviors, is one example of the many research questions. Innovating new interfaces for the reliable deployment of models, so that humans can creatively interact with AI affordances, is the work to be done through intentional engineering and design processes. Preventing misuse and incentivizing the markets to build safer and more interpretable systems is the work that should be done by policymakers and lawmakers.

I can’t think of a better place to shape the future I want to build than Anthropic, which does it all very well, with the highest scientific rigor and in collaboration with the policy world. It is a stellar team of researchers and engineers whose work I deeply admire. As a new grad, I’m very humbled to be joining their team as a Member of the Technical Staff to do my best work, vigorously study the language of neural nets, and think of the most creative ways to bring about the future I want to live in. And I can’t wait to share more about this journey with you all.

Karina.

Karina, this is awesome! Congratulations on joining Anthropic. I love the work y'all are doing. I am working on a coaching application and would love to build with Anthropic. I know you are probably not the right person to ask about API access, but could you point me in the right direction of someone who is? Sorry to be asking here on this forum :)